# Run this cell to set up packages for lecture.

from lec05_imports import *

Agenda¶

- Querying.

- Querying with multiple conditions.

- Grouping.

- After class: challenge problems.

Don't forget about these resources!¶

You belong here! 🤝¶

- We're moving very quickly in this class.

- This may be the first time you're ever writing code, and you may question whether or not you belong in this class, or if data science is for you.

- We promise, no matter what your prior experience is, the answer is yes, you belong!

- Please come to office hours (see the schedule here) and post on Campuswire for help – we're here to make sure you succeed in this course.

The data: US states 🗽¶

We'll continue working with the same data from last time.

states = bpd.read_csv('data/states.csv')

states = states.assign(Density=states.get('Population') / states.get('Land Area'))

states = states.set_index('State')

states

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 |

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 |

| ... | ... | ... | ... | ... | ... | ... |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 |

50 rows × 6 columns

Example 5: Which states are in the West?¶

Key concept: Querying.

The problem¶

We want to create a DataFrame consisting of only the states whose 'Region' is 'West'. How do we do that?

The solution¶

# This DataFrame only contains rows where the 'Region' is 'West'!

only_west = states[states.get('Region') == 'West']

only_west

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 |

| California | West | Sacramento | 39538223 | 155779 | Democratic | 253.81 |

| ... | ... | ... | ... | ... | ... | ... |

| Utah | West | Salt Lake City | 3271616 | 82170 | Republican | 39.82 |

| Washington | West | Olympia | 7705281 | 66456 | Democratic | 115.95 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 |

13 rows × 6 columns

🤯 What just happened?

Aside: Booleans¶

- When we compare two values, the result is either

TrueorFalse.- Notice, these words are not in quotes.

boolis a data type in Python, just likeint,float, andstr.- It stands for "Boolean", named after George Boole, an early mathematician.

- There are only two possible Boolean values:

TrueorFalse.- Yes or no.

- On or off.

- 1 or 0.

5 == 6

False

type(5 == 6)

bool

9 + 10 < 21

True

Comparison operators¶

There are several types of comparisons we can make.

| symbol | meaning |

|---|---|

== |

equal to |

!= |

not equal to |

< |

less than |

<= |

less than or equal to |

> |

greater than |

>= |

greater than or equal to |

When comparing an entire Series to a single value, the result is a Series of bools (via broadcasting).

states

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 |

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 |

| ... | ... | ... | ... | ... | ... | ... |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 |

50 rows × 6 columns

states.get('Region') == 'West'

State

Alabama False

Alaska True

Arizona True

...

West Virginia False

Wisconsin False

Wyoming True

Name: Region, Length: 50, dtype: bool

What is a query? 🤔¶

- A query is code that extracts rows from a DataFrame for which certain condition(s) are true.

- We use queries to filter DataFrames to contain only the rows that satisfy given conditions.

How do we query a DataFrame?¶

To select only certain rows of states:

- Make a sequence (list/array/Series) of

Trues (keep) andFalses (toss), usually by making a comparison. - Then pass it into

states[sequence_goes_here].

states[states.get('Region') == 'West']

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 |

| California | West | Sacramento | 39538223 | 155779 | Democratic | 253.81 |

| ... | ... | ... | ... | ... | ... | ... |

| Utah | West | Salt Lake City | 3271616 | 82170 | Republican | 39.82 |

| Washington | West | Olympia | 7705281 | 66456 | Democratic | 115.95 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 |

13 rows × 6 columns

What if the condition isn't satisfied?¶

states[states.get('Region') == 'Pacific Northwest']

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State |

Example 6: What proportion of US states are Republican?¶

Key concept: Shape of a DataFrame.

Strategy¶

- Query to extract a DataFrame of just the states where the

'Party'is'Republican'. - Count the number of such states.

- Divide by the total number of states.

only_rep = states[states.get('Party') == 'Republican']

only_rep

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 |

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 |

| ... | ... | ... | ... | ... | ... | ... |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 |

31 rows × 6 columns

Shape of a DataFrame¶

.shapereturns the number of rows and columns in a given DataFrame..shapeis not a method, so we don't use parentheses..shapeis an attribute, as it describes the DataFrame.

- Access each with

[]:.shape[0]for rows..shape[1]for columns.

only_rep.shape

(31, 6)

# Number of rows.

only_rep.shape[0]

31

# Number of columns.

only_rep.shape[1]

6

# What proportion of US states are Republican?

only_rep.shape[0] / states.shape[0]

0.62

Example 7: Which Midwestern state has the most land area?¶

Key concepts: Working with the index. Combining multiple steps.

Strategy¶

- Query to extract a DataFrame of just the states in the

'Midwest'. - Sort by

'Land Area'in descending order. - Extract the first element from the index.

midwest = states[states.get('Region') == 'Midwest']

midwest

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Illinois | Midwest | Springfield | 12812508 | 55519 | Democratic | 230.78 |

| Indiana | Midwest | Indianapolis | 6785528 | 35826 | Republican | 189.40 |

| Iowa | Midwest | Des Moines | 3190369 | 55857 | Republican | 57.12 |

| ... | ... | ... | ... | ... | ... | ... |

| Ohio | Midwest | Columbus | 11799448 | 40861 | Republican | 288.77 |

| South Dakota | Midwest | Pierre | 886667 | 75811 | Republican | 11.70 |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 |

12 rows × 6 columns

midwest_sorted = midwest.sort_values(by='Land Area', ascending=False)

midwest_sorted

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Kansas | Midwest | Topeka | 2937880 | 81759 | Republican | 35.93 |

| Minnesota | Midwest | Saint Paul | 5706494 | 79627 | Democratic | 71.67 |

| Nebraska | Midwest | Lincoln | 1961504 | 76824 | Republican | 25.53 |

| ... | ... | ... | ... | ... | ... | ... |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 |

| Ohio | Midwest | Columbus | 11799448 | 40861 | Republican | 288.77 |

| Indiana | Midwest | Indianapolis | 6785528 | 35826 | Republican | 189.40 |

12 rows × 6 columns

- The answer is Kansas, but how do we get it in code?

midwest_sorted.get('State').iloc[0]

--------------------------------------------------------------------------- KeyError Traceback (most recent call last) Cell In[18], line 1 ----> 1 midwest_sorted.get('State').iloc[0] File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/babypandas/utils.py:20, in suppress_warnings.<locals>.wrapper(*args, **kwargs) 18 with warnings.catch_warnings(): 19 warnings.simplefilter("ignore") ---> 20 return func(*args, **kwargs) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/babypandas/bpd.py:328, in DataFrame.get(self, key) 326 if any(mask): 327 k = [key] if isinstance(key, str) else key --> 328 raise KeyError("{} not found in columns".format(np.array(k)[mask])) 330 f = _lift_to_pd(self._pd.get) 331 return f(key=key) KeyError: "['State'] not found in columns"

Working with the index¶

- We can't use

.getbecause.getis only for columns, and there is no column called'State'.- Instead,

'State'is the index of the DataFrame.

- Instead,

- To extract the index of a DataFrame, use

.index.- Like

.shape, this is an attribute of the DataFrame, not a method. Don't use parentheses.

- Like

- Access particular elements in the index with

[].

midwest_sorted.index

Index(['Kansas', 'Minnesota', 'Nebraska', 'South Dakota', 'North Dakota',

'Missouri', 'Michigan', 'Iowa', 'Illinois', 'Wisconsin', 'Ohio',

'Indiana'],

dtype='object', name='State')

midwest_sorted.index[0]

'Kansas'

Combining multiple steps¶

It is not necessary to define the intermediate variables

midwestandmidwest_sorted. We can do everything in one line of code.When solving a multi-step problem, develop your solution incrementally. Write one piece of code at a time and run it.

# Final solution, which you should build up one step at a time.

states[states.get('Region') == 'Midwest'].sort_values(by='Land Area', ascending=False).index[0]

'Kansas'

- If a line of code gets too long, enclose it in parentheses to split it over multiple lines.

# You can space your code out like this if needed.

(

states[states.get('Region') == 'Midwest']

.sort_values(by='Land Area', ascending=False)

.index[0]

)

'Kansas'

Concept Check ✅ – Answer at cc.dsc10.com¶

Which expression below evaluates to the total population of the 'West'?

A. states[states.get('Region') == 'West'].get('Population').sum()

B. states.get('Population').sum()[states.get('Region') == 'West']

C. states['West'].get('Population').sum()

D. More than one of the above.

...

Ellipsis

Example 8: What are the top three most-populated Republican states in the South?¶

Key concepts: Queries with multiple conditions. Selecting rows by position.

Multiple conditions¶

- To write a query with multiple conditions, use

&for "and" and|for "or".&: All conditions must be true.|: At least one condition must be true.

- You must use

(parentheses)around each condition! - 🚨 Don't use the Python keywords

andandorhere! They do not behave as you'd want.- See BPD 10.3 for an explanation.

states[(states.get('Party') == 'Republican') & (states.get('Region') == 'South')]

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 |

| Arkansas | South | Little Rock | 3011524 | 52035 | Republican | 57.87 |

| Florida | South | Tallahassee | 21538187 | 53625 | Republican | 401.64 |

| ... | ... | ... | ... | ... | ... | ... |

| Tennessee | South | Nashville | 6910840 | 41235 | Republican | 167.60 |

| Texas | South | Austin | 29145505 | 261232 | Republican | 111.57 |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

13 rows × 6 columns

# You can also add line breaks within brackets.

states[(states.get('Party') == 'Republican') &

(states.get('Region') == 'South')]

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 |

| Arkansas | South | Little Rock | 3011524 | 52035 | Republican | 57.87 |

| Florida | South | Tallahassee | 21538187 | 53625 | Republican | 401.64 |

| ... | ... | ... | ... | ... | ... | ... |

| Tennessee | South | Nashville | 6910840 | 41235 | Republican | 167.60 |

| Texas | South | Austin | 29145505 | 261232 | Republican | 111.57 |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

13 rows × 6 columns

The & and | operators work element-wise!¶

(states.get('Party') == 'Republican')

State

Alabama True

Alaska True

Arizona True

...

West Virginia True

Wisconsin True

Wyoming True

Name: Party, Length: 50, dtype: bool

(states.get('Region') == 'South')

State

Alabama True

Alaska False

Arizona False

...

West Virginia True

Wisconsin False

Wyoming False

Name: Region, Length: 50, dtype: bool

(states.get('Party') == 'Republican') & (states.get('Region') == 'South')

State

Alabama True

Alaska False

Arizona False

...

West Virginia True

Wisconsin False

Wyoming False

Length: 50, dtype: bool

Original Question: What are the top three most-populated Republican states in the South?¶

(

states[(states.get('Party') == 'Republican') &

(states.get('Region') == 'South')]

.sort_values(by='Population', ascending=False)

)

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Texas | South | Austin | 29145505 | 261232 | Republican | 111.57 |

| Florida | South | Tallahassee | 21538187 | 53625 | Republican | 401.64 |

| Georgia | South | Atlanta | 10711908 | 57513 | Republican | 186.25 |

| ... | ... | ... | ... | ... | ... | ... |

| Arkansas | South | Little Rock | 3011524 | 52035 | Republican | 57.87 |

| Mississippi | South | Jackson | 2961279 | 46923 | Republican | 63.11 |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

13 rows × 6 columns

How do we extract the first three rows of this DataFrame?

Using .take to select rows by position¶

- Querying allows us to select rows that satisfy a certain condition.

- We can also select rows in specific positions with

.take(sequence_of_integer_positions). This keeps only the rows whose positions are in the specified sequence (list/array).- This is analogous to using

.iloc[]on a Series. - It's rare to need to select rows by integer position. Querying is far more useful.

- This is analogous to using

(

states[(states.get('Party') == 'Republican') &

(states.get('Region')=='South')]

.sort_values(by='Population', ascending=False)

.take([0, 1, 2])

)

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Texas | South | Austin | 29145505 | 261232 | Republican | 111.57 |

| Florida | South | Tallahassee | 21538187 | 53625 | Republican | 401.64 |

| Georgia | South | Atlanta | 10711908 | 57513 | Republican | 186.25 |

.take(np.arange(3))could equivalently be used in place of.take([0, 1, 2]).

Extra Practice¶

Write code to answer each question below.

- What is the capital city of the state in the

'West'with the largest land area? - How many states in the

'Northeast'have more land area than an average US state? - What is the total population of the

'Midwest','South', and'Northeast?

✅ Click here to see the answers after you've attempted the problems on your own.

- What is the capital city of the state in the West with the largest land area?

states[states.get('Region') == 'West'].sort_values(by='Land Area', ascending=False).get('Capital City').iloc[0]

- How many states in the Northeast have more land area than an average US state?

states[(states.get('Region') == 'Northeast') &

(states.get('Land Area') > states.get('Land Area').mean())].shape[0]

- What is the total population of the Midwest, South, and Northeast?

states[(states.get('Region') == 'Midwest') |

(states.get('Region') == 'South') |

(states.get('Region') == 'Northeast')].get('Population').sum()

Alternate solution to 3:

states.get('Population').sum() - states[states.get('Region') == 'West'].get('Population').sum()

...

Ellipsis

Adjusting columns¶

Adjusting columns with .assign, .drop, and .get¶

- To rename a column, use

.assignto create a new column containing the same values as an existing column.- New columns are added on the right.

states.assign(Pop_in_millions=states.get('Population') / 1_000_000)

| Region | Capital City | Population | Land Area | Party | Density | Pop_in_millions | |

|---|---|---|---|---|---|---|---|

| State | |||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 | 5.02 |

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 | 0.73 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 | 7.15 |

| ... | ... | ... | ... | ... | ... | ... | ... |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 | 1.79 |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 | 5.89 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 | 0.58 |

50 rows × 7 columns

- Then use

.drop(columns=list_of_column_labels)to drop any columns you no longer need.- Alternatively, use

.get(list_of_column_labels)to keep only certain columns. The columns will appear in the order you specify, so this is also useful for reordering columns!

- Alternatively, use

(

states

.assign(Pop_in_millions=states.get('Population') / 1_000_000)

.drop(columns=['Population'])

)

| Region | Capital City | Land Area | Party | Density | Pop_in_millions | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 50645 | Republican | 99.21 | 5.02 |

| Alaska | West | Juneau | 570641 | Republican | 1.29 | 0.73 |

| Arizona | West | Phoenix | 113594 | Republican | 62.96 | 7.15 |

| ... | ... | ... | ... | ... | ... | ... |

| West Virginia | South | Charleston | 24038 | Republican | 74.62 | 1.79 |

| Wisconsin | Midwest | Madison | 54158 | Republican | 108.82 | 5.89 |

| Wyoming | West | Cheyenne | 97093 | Republican | 5.94 | 0.58 |

50 rows × 6 columns

(

states

.assign(Pop_in_millions=states.get('Population') / 1_000_000)

# .get() is also useful for reordering columns

.get(['Pop_in_millions', 'Capital City'])

)

| Pop_in_millions | Capital City | |

|---|---|---|

| State | ||

| Alabama | 5.02 | Montgomery |

| Alaska | 0.73 | Juneau |

| Arizona | 7.15 | Phoenix |

| ... | ... | ... |

| West Virginia | 1.79 | Charleston |

| Wisconsin | 5.89 | Madison |

| Wyoming | 0.58 | Cheyenne |

50 rows × 2 columns

Two ways to .get¶

- Getting a single column name gives a Series.

- Getting a

listof column names gives a DataFrame. (Even if the list has just one element!)

states.get('Capital City')

State

Alabama Montgomery

Alaska Juneau

Arizona Phoenix

...

West Virginia Charleston

Wisconsin Madison

Wyoming Cheyenne

Name: Capital City, Length: 50, dtype: object

states.get(['Capital City', 'Party'])

| Capital City | Party | |

|---|---|---|

| State | ||

| Alabama | Montgomery | Republican |

| Alaska | Juneau | Republican |

| Arizona | Phoenix | Republican |

| ... | ... | ... |

| West Virginia | Charleston | Republican |

| Wisconsin | Madison | Republican |

| Wyoming | Cheyenne | Republican |

50 rows × 2 columns

states.get(['Capital City'])

| Capital City | |

|---|---|

| State | |

| Alabama | Montgomery |

| Alaska | Juneau |

| Arizona | Phoenix |

| ... | ... |

| West Virginia | Charleston |

| Wisconsin | Madison |

| Wyoming | Cheyenne |

50 rows × 1 columns

Example 9: Which region is most populated?¶

Key concept: Grouping by one column.

Organizing states by region¶

We can find the total population of any one region using the tools we already have.

states[states.get('Region') == 'West'].get('Population').sum()

np.int64(78588572)

states[states.get('Region') == 'Midwest'].get('Population').sum()

np.int64(68985454)

But can we find the total population of every region all at the same time, without writing very similar code multiple times? Yes, there is a better way!

A new method: .groupby¶

Observe what happens when we use the .groupby method on states with the argument 'Region'.

states.get(['Region', 'Population']).groupby('Region').sum()

| Population | |

|---|---|

| Region | |

| Midwest | 68985454 |

| Northeast | 57609148 |

| South | 125576562 |

| West | 78588572 |

These populations (for the 'West' and 'Midwest') match the ones we found on the previous slide, except now we get the populations for all regions at the same time. What just happened? 🤯

An illustrative example: Pets 🐱 🐶🐹¶

Consider the DataFrame pets, shown below.

| Species | Color | Weight | Age | |

|---|---|---|---|---|

| 0 | dog | black | 40 | 5.0 |

| 1 | cat | golden | 15 | 8.0 |

| 2 | cat | black | 20 | 9.0 |

| 3 | dog | white | 80 | 2.0 |

| 4 | dog | golden | 25 | 0.5 |

| 5 | hamster | golden | 1 | 3.0 |

Let's see what happens under the hood when we run pets.groupby('Species').mean().

show_grouping_animation()

Let's try it out!¶

pets = bpd.DataFrame().assign(

Species=['dog', 'cat', 'cat', 'dog', 'dog', 'hamster'],

Color=['black', 'golden', 'black', 'white', 'golden', 'golden'],

Weight=[40, 15, 20, 80, 25, 1],

Age=[5, 8, 9, 2, 0.5, 3]

)

pets

| Species | Color | Weight | Age | |

|---|---|---|---|---|

| 0 | dog | black | 40 | 5.0 |

| 1 | cat | golden | 15 | 8.0 |

| 2 | cat | black | 20 | 9.0 |

| 3 | dog | white | 80 | 2.0 |

| 4 | dog | golden | 25 | 0.5 |

| 5 | hamster | golden | 1 | 3.0 |

pets[pets.get('Species') == 'hamster'].get(['Species', 'Weight', 'Age'])

| Species | Weight | Age | |

|---|---|---|---|

| 5 | hamster | 1 | 3.0 |

pets.get(['Species', 'Weight', 'Age']).groupby('Species').mean()

| Weight | Age | |

|---|---|---|

| Species | ||

| cat | 17.50 | 8.5 |

| dog | 48.33 | 2.5 |

| hamster | 1.00 | 3.0 |

It takes several steps to go from the original pets DataFrame to this grouped DataFrame, but we don't get to see any of Python's inner workings, just the final output.

Back to states: which region is most populated?¶

states

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 |

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 |

| ... | ... | ... | ... | ... | ... | ... |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 |

50 rows × 6 columns

states.get(['Region', 'Population']).groupby('Region').sum()

| Population | |

|---|---|

| Region | |

| Midwest | 68985454 |

| Northeast | 57609148 |

| South | 125576562 |

| West | 78588572 |

# Note the use of .index – remember, the index isn't a column!

(

states

.groupby('Region')

.sum()

.sort_values(by='Population', ascending=False)

.index[0]

)

'South'

Using .groupby in general¶

In short, .groupby aggregates (collects) all rows with the same value in a specified column (e.g. 'Region') into a single row in the resulting DataFrame, using an aggregation method (e.g. .sum()) to combine values from different rows with the same value in the specified column.

To use .groupby:

- Choose a column to group by and column(s) to aggregate with

.get()..get(['Region', 'Population', ...])to select out the columns you want to group by and aggregate together.- Rule of thumb:

- Choose one categorical column to group by (in this case,

Region). - Choose one or more numeric columns to aggregate (

Population,Land Area, etc.) - Don't include non-numeric columns to aggregate unless your aggregation method doesn't perform arithmetic (e.g.

.count()is okay on string columns, but not.mean()).

- Choose one categorical column to group by (in this case,

- Make groups with

.groupby()..groupby(column_name)will gather rows which have the same value in the specified column (column_name).- In the resulting DataFrame, there will be one row for every unique value in that column.

- Call an aggregation method.

- The aggregation method will be applied within each group.

- The aggregation method is applied individually to each column.

- If it doesn't make sense to use the aggregation method on a column, you will get an error or nonsensical result.

- Common aggregation methods include

.count(),.sum(),.mean(),.median(),.max(), and.min().

Observations on grouping¶

- After grouping, the index changes. The new row labels are the group labels (i.e., the unique values in the column that we grouped on), sorted in ascending order.

states

| Region | Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|---|

| State | ||||||

| Alabama | South | Montgomery | 5024279 | 50645 | Republican | 99.21 |

| Alaska | West | Juneau | 733391 | 570641 | Republican | 1.29 |

| Arizona | West | Phoenix | 7151502 | 113594 | Republican | 62.96 |

| ... | ... | ... | ... | ... | ... | ... |

| West Virginia | South | Charleston | 1793716 | 24038 | Republican | 74.62 |

| Wisconsin | Midwest | Madison | 5893718 | 54158 | Republican | 108.82 |

| Wyoming | West | Cheyenne | 576851 | 97093 | Republican | 5.94 |

50 rows × 6 columns

states.get(['Region', 'Population']).groupby('Region').sum()

| Population | |

|---|---|

| Region | |

| Midwest | 68985454 |

| Northeast | 57609148 |

| South | 125576562 |

| West | 78588572 |

Tip: look for keywords "per," "for each," and "indexed by" when solving problems.

- The aggregation method is applied separately to each column. If it does not make sense to apply the aggregation method to a certain column, you can get an (long, scary-looking) error. It really helps to ask an AI tool to help translate these to regular English.

states.get(['Region', 'Party']).groupby('Region').mean()

--------------------------------------------------------------------------- TypeError Traceback (most recent call last) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/groupby.py:1944, in GroupBy._agg_py_fallback(self, how, values, ndim, alt) 1943 try: -> 1944 res_values = self._grouper.agg_series(ser, alt, preserve_dtype=True) 1945 except Exception as err: File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/ops.py:873, in BaseGrouper.agg_series(self, obj, func, preserve_dtype) 871 preserve_dtype = True --> 873 result = self._aggregate_series_pure_python(obj, func) 875 npvalues = lib.maybe_convert_objects(result, try_float=False) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/ops.py:894, in BaseGrouper._aggregate_series_pure_python(self, obj, func) 893 for i, group in enumerate(splitter): --> 894 res = func(group) 895 res = extract_result(res) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/groupby.py:2461, in GroupBy.mean.<locals>.<lambda>(x) 2458 else: 2459 result = self._cython_agg_general( 2460 "mean", -> 2461 alt=lambda x: Series(x, copy=False).mean(numeric_only=numeric_only), 2462 numeric_only=numeric_only, 2463 ) 2464 return result.__finalize__(self.obj, method="groupby") File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/series.py:6570, in Series.mean(self, axis, skipna, numeric_only, **kwargs) 6562 @doc(make_doc("mean", ndim=1)) 6563 def mean( 6564 self, (...) 6568 **kwargs, 6569 ): -> 6570 return NDFrame.mean(self, axis, skipna, numeric_only, **kwargs) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/generic.py:12485, in NDFrame.mean(self, axis, skipna, numeric_only, **kwargs) 12478 def mean( 12479 self, 12480 axis: Axis | None = 0, (...) 12483 **kwargs, 12484 ) -> Series | float: > 12485 return self._stat_function( 12486 "mean", nanops.nanmean, axis, skipna, numeric_only, **kwargs 12487 ) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/generic.py:12442, in NDFrame._stat_function(self, name, func, axis, skipna, numeric_only, **kwargs) 12440 validate_bool_kwarg(skipna, "skipna", none_allowed=False) > 12442 return self._reduce( 12443 func, name=name, axis=axis, skipna=skipna, numeric_only=numeric_only 12444 ) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/series.py:6478, in Series._reduce(self, op, name, axis, skipna, numeric_only, filter_type, **kwds) 6474 raise TypeError( 6475 f"Series.{name} does not allow {kwd_name}={numeric_only} " 6476 "with non-numeric dtypes." 6477 ) -> 6478 return op(delegate, skipna=skipna, **kwds) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/nanops.py:147, in bottleneck_switch.__call__.<locals>.f(values, axis, skipna, **kwds) 146 else: --> 147 result = alt(values, axis=axis, skipna=skipna, **kwds) 149 return result File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/nanops.py:404, in _datetimelike_compat.<locals>.new_func(values, axis, skipna, mask, **kwargs) 402 mask = isna(values) --> 404 result = func(values, axis=axis, skipna=skipna, mask=mask, **kwargs) 406 if datetimelike: File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/nanops.py:720, in nanmean(values, axis, skipna, mask) 719 the_sum = values.sum(axis, dtype=dtype_sum) --> 720 the_sum = _ensure_numeric(the_sum) 722 if axis is not None and getattr(the_sum, "ndim", False): File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/nanops.py:1701, in _ensure_numeric(x) 1699 if isinstance(x, str): 1700 # GH#44008, GH#36703 avoid casting e.g. strings to numeric -> 1701 raise TypeError(f"Could not convert string '{x}' to numeric") 1702 try: TypeError: Could not convert string 'DemocraticRepublicanRepublicanRepublicanRepublicanDemocraticRepublicanRepublicanRepublicanRepublicanRepublicanRepublican' to numeric The above exception was the direct cause of the following exception: TypeError Traceback (most recent call last) Cell In[50], line 1 ----> 1 states.get(['Region', 'Party']).groupby('Region').mean() File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/babypandas/utils.py:20, in suppress_warnings.<locals>.wrapper(*args, **kwargs) 18 with warnings.catch_warnings(): 19 warnings.simplefilter("ignore") ---> 20 return func(*args, **kwargs) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/babypandas/bpd.py:1514, in DataFrameGroupBy.mean(self) 1510 """ 1511 Compute mean of group. 1512 """ 1513 f = _lift_to_pd(self._pd.mean) -> 1514 return f() File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/babypandas/bpd.py:1596, in _lift_to_pd.<locals>.closure(*vargs, **kwargs) 1590 vargs = [x._pd if isinstance(x, types) else x for x in vargs] 1591 kwargs = { 1592 k: x._pd if isinstance(x, types) else x 1593 for (k, x) in kwargs.items() 1594 } -> 1596 a = func(*vargs, **kwargs) 1597 if isinstance(a, pd.DataFrame): 1598 return DataFrame(data=a) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/groupby.py:2459, in GroupBy.mean(self, numeric_only, engine, engine_kwargs) 2452 return self._numba_agg_general( 2453 grouped_mean, 2454 executor.float_dtype_mapping, 2455 engine_kwargs, 2456 min_periods=0, 2457 ) 2458 else: -> 2459 result = self._cython_agg_general( 2460 "mean", 2461 alt=lambda x: Series(x, copy=False).mean(numeric_only=numeric_only), 2462 numeric_only=numeric_only, 2463 ) 2464 return result.__finalize__(self.obj, method="groupby") File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/groupby.py:2005, in GroupBy._cython_agg_general(self, how, alt, numeric_only, min_count, **kwargs) 2002 result = self._agg_py_fallback(how, values, ndim=data.ndim, alt=alt) 2003 return result -> 2005 new_mgr = data.grouped_reduce(array_func) 2006 res = self._wrap_agged_manager(new_mgr) 2007 if how in ["idxmin", "idxmax"]: File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/internals/managers.py:1488, in BlockManager.grouped_reduce(self, func) 1484 if blk.is_object: 1485 # split on object-dtype blocks bc some columns may raise 1486 # while others do not. 1487 for sb in blk._split(): -> 1488 applied = sb.apply(func) 1489 result_blocks = extend_blocks(applied, result_blocks) 1490 else: File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/internals/blocks.py:395, in Block.apply(self, func, **kwargs) 389 @final 390 def apply(self, func, **kwargs) -> list[Block]: 391 """ 392 apply the function to my values; return a block if we are not 393 one 394 """ --> 395 result = func(self.values, **kwargs) 397 result = maybe_coerce_values(result) 398 return self._split_op_result(result) File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/groupby.py:2002, in GroupBy._cython_agg_general.<locals>.array_func(values) 1999 return result 2001 assert alt is not None -> 2002 result = self._agg_py_fallback(how, values, ndim=data.ndim, alt=alt) 2003 return result File ~/repos/dsc10/dsc10-2026-wi-private/.venv/lib/python3.14/site-packages/pandas/core/groupby/groupby.py:1948, in GroupBy._agg_py_fallback(self, how, values, ndim, alt) 1946 msg = f"agg function failed [how->{how},dtype->{ser.dtype}]" 1947 # preserve the kind of exception that raised -> 1948 raise type(err)(msg) from err 1950 dtype = ser.dtype 1951 if dtype == object: TypeError: agg function failed [how->mean,dtype->object]

Or, you can get a nonsensical result:

# whoa, what happened here?

states.get(['Region', 'Party']).groupby('Region').sum()

| Party | |

|---|---|

| Region | |

| Midwest | DemocraticRepublicanRepublicanRepublicanRepubl... |

| Northeast | DemocraticDemocraticDemocraticDemocraticDemocr... |

| South | RepublicanRepublicanDemocraticRepublicanRepubl... |

| West | RepublicanRepublicanDemocraticDemocraticDemocr... |

- Since the aggregation method is applied to each column separately, the rows of the resulting DataFrame need to be interpreted with care.

states.groupby('Region').max()

| Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|

| Region | |||||

| Midwest | Topeka | 12812508 | 81759 | Republican | 288.77 |

| Northeast | Trenton | 20201249 | 47126 | Republican | 1263.12 |

| South | Tallahassee | 29145505 | 261232 | Republican | 636.37 |

| West | Santa Fe | 39538223 | 570641 | Republican | 253.81 |

12812508 / 81759 == 288.77

False

- The column names don't make sense after grouping with the

.count()aggregation method.

states.groupby('Region').count()

| Capital City | Population | Land Area | Party | Density | |

|---|---|---|---|---|---|

| Region | |||||

| Midwest | 12 | 12 | 12 | 12 | 12 |

| Northeast | 9 | 9 | 9 | 9 | 9 |

| South | 16 | 16 | 16 | 16 | 16 |

| West | 13 | 13 | 13 | 13 | 13 |

Dropping, renaming, and reordering columns¶

Consider dropping unneeded columns and renaming columns as follows:

- Use

.assignto create a new column containing the same values as the old column(s). - Use

.drop(columns=list_of_column_labels)to drop the old column(s).- Alternatively, use

.get(list_of_column_labels)to keep only the columns in the given list. The columns will appear in the order you specify, so this is also useful for reordering columns!

- Alternatively, use

states_by_region = states.groupby('Region').count()

states_by_region = states_by_region.assign(

States=states_by_region.get('Capital City')

).get(['States'])

states_by_region

| States | |

|---|---|

| Region | |

| Midwest | 12 |

| Northeast | 9 |

| South | 16 |

| West | 13 |

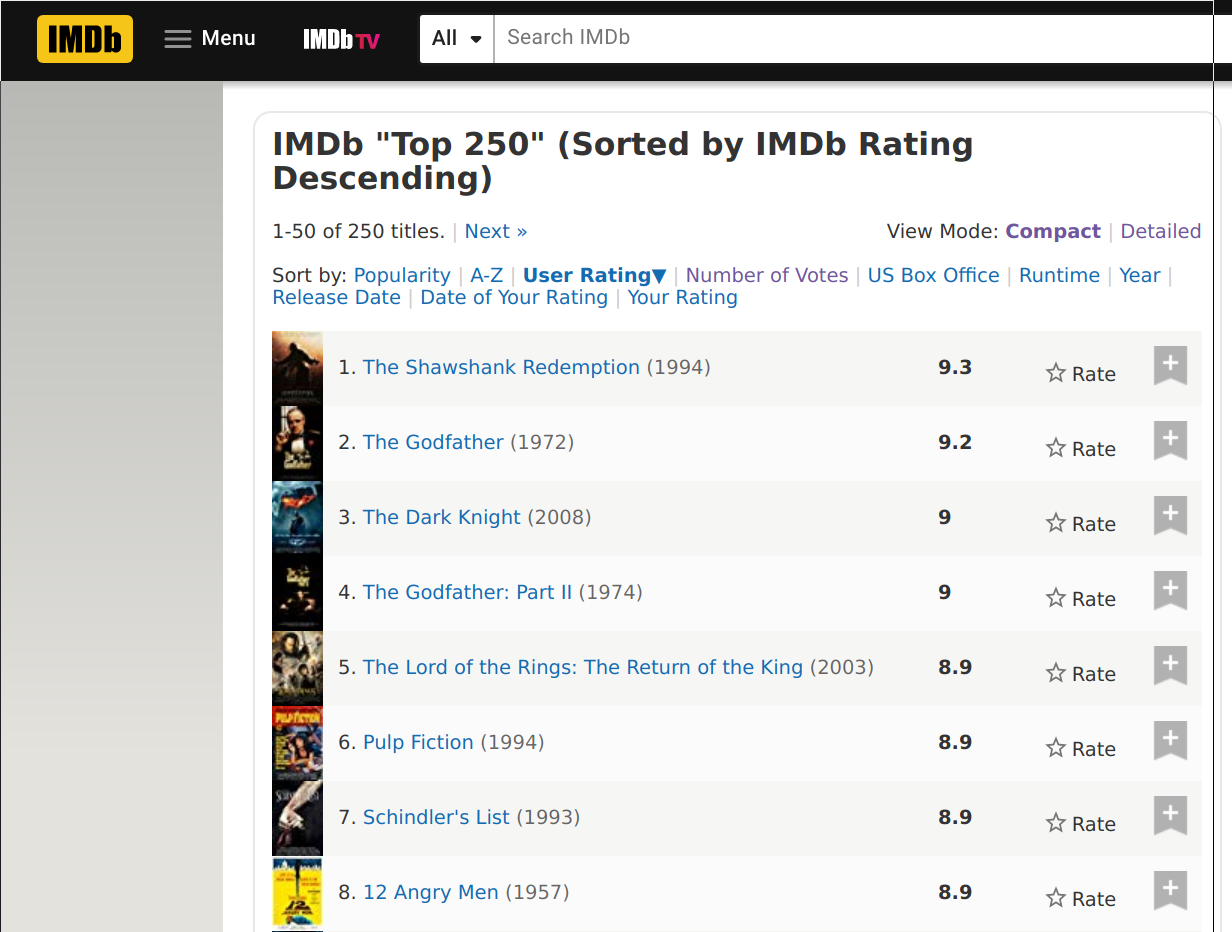

Example Exam Problems: IMDb dataset 🎞️¶

Extra practice¶

We won't cover this section in class. Instead, it's here for you to practice with some harder examples that are typical of what we will ask in exams or quizzes.

The video below walks through the solutions (it's also linked here). You can also see the solutions by clicking the "✅ Click here to see the answer." button below each question.

from IPython.display import YouTubeVideo

YouTubeVideo('xg7rnjWnZ48')

Before watching the video or looking at the solutions, make sure to try these problems on your own – they're great prep for homeworks, projects, and exams! Feel free to ask about them in office hours or on Ed.

imdb = bpd.read_csv('data/imdb.csv').set_index('Title').sort_values(by='Rating')

imdb

| Votes | Rating | Year | Decade | |

|---|---|---|---|---|

| Title | ||||

| Akira | 91652 | 8.0 | 1988 | 1980 |

| Per un pugno di dollari | 124671 | 8.0 | 1964 | 1960 |

| Guardians of the Galaxy | 527349 | 8.0 | 2014 | 2010 |

| ... | ... | ... | ... | ... |

| The Godfather: Part II | 692753 | 9.0 | 1974 | 1970 |

| The Shawshank Redemption | 1498733 | 9.2 | 1994 | 1990 |

| The Godfather | 1027398 | 9.2 | 1972 | 1970 |

250 rows × 4 columns

Question: How many movies appear from each decade?¶

imdb.groupby('Decade').count()

| Votes | Rating | Year | |

|---|---|---|---|

| Decade | |||

| 1920 | 4 | 4 | 4 |

| 1930 | 7 | 7 | 7 |

| 1940 | 14 | 14 | 14 |

| ... | ... | ... | ... |

| 1990 | 42 | 42 | 42 |

| 2000 | 50 | 50 | 50 |

| 2010 | 29 | 29 | 29 |

10 rows × 3 columns

# We'll learn how to make plots like this in the next lecture!

imdb.groupby('Decade').count().plot(y='Year');

Question: What was the highest rated movie of the 1990s?¶

Let's try to do this two different ways.

Without grouping¶

imdb[imdb.get('Decade') == 1990].sort_values('Rating', ascending=False).index[0]

'The Shawshank Redemption'

Note: The command to extract the index of a DataFrame is .index - no parentheses! This is different than the way we extract columns, with .get(), because the index is not a column.

With grouping¶

imdb.reset_index().groupby('Decade').max()

| Title | Votes | Rating | Year | |

|---|---|---|---|---|

| Decade | ||||

| 1920 | The Kid | 98794 | 8.3 | 1927 |

| 1930 | The Wizard of Oz | 259235 | 8.5 | 1939 |

| 1940 | The Treasure of the Sierra Madre | 350551 | 8.6 | 1949 |

| ... | ... | ... | ... | ... |

| 1990 | Unforgiven | 1498733 | 9.2 | 1999 |

| 2000 | Yip Man | 1473049 | 8.9 | 2009 |

| 2010 | X-Men: Days of Future Past | 1271949 | 8.7 | 2015 |

10 rows × 4 columns

- It turns out that this method does not yield the correct answer.

- When we use an aggregation method (e.g.

.max()), aggregation is done to each column individually. - While it's true that the highest rated movie from the 1990s has a rating of 9.2, that movie is not Unforgiven – instead, Unforgiven is the movie that's the latest in the alphabet among all movies from the 1990s.

- Taking the

maxis not helpful here.

Question: How many years have more than 3 movies rated above 8.5?¶

✅ Click here to see the answer.

good_movies_per_year = imdb[imdb.get('Rating') > 8.5].groupby('Year').count()

good_movies_per_year[good_movies_per_year.get('Votes') > 3].shape[0]

As mentioned below, you can also use:

(good_movies_per_year.get('Votes') > 3).sum()

Aside: Using .sum() on a boolean array¶

- Summing a boolean array gives a count of the number of

Trueelements because Python treatsTrueas 1 andFalseas 0. - Can you use that fact here?

Question: Out of the years with more than 3 movies, which had the highest average rating?¶

✅ Click here to see the answer.

# A Series of Trues and Falses; True when there were at least 3 movies on the list from that year

more_than_3_ix = imdb.groupby('Year').count().get('Votes') > 3

# The year with the highest average rating, among the years with more than 3 movies

imdb.groupby('Year').mean()[more_than_3_ix].sort_values(by='Rating').index[-1]

Question: Which year had the longest movie titles, on average?¶

Hint: Use .str.len() on the column or index that contains the names of the movies.

✅ Click here to see the answer.

(

imdb.assign(title_length=imdb.index.str.len())

.groupby('Year').mean()

.sort_values(by='title_length')

.index[-1]

)

The year is 1964 – take a look at the movies from 1964 by querying!

Question: What is the average rating of movies from years that had at least 3 movies in the Top 250?¶

✅ Click here to see the answer.

# A Series of Trues and Falses; True when there were at least 3 movies on the list from that year

more_than_3_ix = imdb.groupby('Year').count().get('Votes') > 3

# The sum of the ratings of movies from years that had at least 3 movies on the list

total_rating = imdb.groupby('Year').sum()[more_than_3_ix].get('Rating').sum()

# The total number of movies from years that had at least 3 movies on the list

count = imdb.groupby('Year').count()[more_than_3_ix].get('Rating').sum()

# The correct answer

average_rating = total_rating / count

# Close, but incorrect:

# Doesn't account for the fact that different years have different numbers of movies on the list

close_but_wrong = imdb.groupby('Year').mean()[more_than_3_ix].get('Rating').mean()

Summary, next time¶

Summary¶

- To write queries that involve multiple conditions:

- Put parentheses around all conditions.

- Separate conditions using

&if you require all to be true, or|if you require at least one to be true.

df.groupby(column_name).agg_method()aggregates all rows with the same value forcolumn_nameinto a single row in the resulting DataFrame, usingagg_method()to combine values.- Common aggregation methods include

.count(),.sum(),.mean(),.median(),.max(), and.min(). - Tip: look for keywords "per," "for each," and "indexed by" when solving problems.

- Common aggregation methods include

Next time¶

A picture is worth a 1000 words – it's time to visualize!